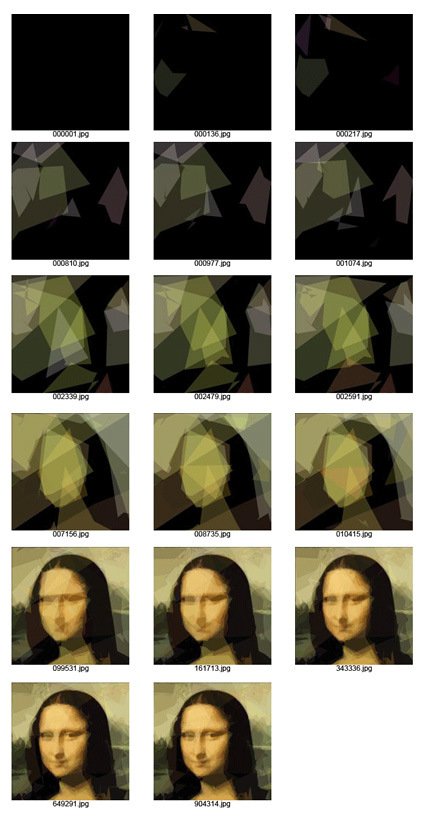

Back in school I had a professor who taught us about design+genetics (and called it Geno-metrics). The central thesis was that designers should move away from designing the object itself and towards designing the parameters/rules within which the object can exist. In a one-semester exposure this amounted to little more than programming parametric CAD software to churn out hundreds of designs based on a series of randomly varying dimensions (within reasonable bounds).
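To make the exercise concrete, here is a minimal sketch in Python rather than CAD software. The stool dimensions, their names, and their bounds are all hypothetical stand-ins I made up for illustration; the point is only that every design is a random draw from within fixed ranges.

```python
import random

# Hypothetical "genes" for a stool and illustrative bounds for each one.
# These names and numbers are assumptions, not values from the original exercise.
BOUNDS = {
    "seat_height_cm": (40.0, 75.0),
    "seat_diameter_cm": (25.0, 40.0),
    "leg_splay_deg": (0.0, 15.0),
}


def random_design(rng):
    """Draw one design by sampling every dimension uniformly within its bounds."""
    return {name: rng.uniform(low, high) for name, (low, high) in BOUNDS.items()}


def generate_designs(count, seed=0):
    """Produce `count` random designs; the seed makes the run repeatable."""
    rng = random.Random(seed)
    return [random_design(rng) for _ in range(count)]


designs = generate_designs(100)
```

Every design shares the same set of dimensions (the 'genes'), and only the values differ. Nothing here judges whether a design is any good.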

So we were supposed to find an object, program a range for a core set of dimensions, and let each dimension be randomized within these bounds. Because of the ‘laws’ built into the program, the outcomes are bound to vary and yet share identifiable ‘genes’. For instance, here are some stool designs (not necessarily valid) that were executed by the computer:

I remember the majority of the class balked at the idea. Some of the reasons include:

“So what does that make me? I’m here to learn design – if the computer does everything, then what’s the point?” – the same was once said about many of the design tools that designers now take for granted.

“How can the computer make good designs – it has no brains/intelligence?” – Well maybe not in 100 iterations – but what about in 1000? 1 million? 1 billion?

I was somewhat sceptical too, but the idea of ‘genes’ captured my imagination: the idea that you can boil down a cacophonous, complex external object (or even a system) and distill it into its essence with just a few variables. However, the shortcoming of the above exercise lay in the fact that, at the end of the day, the judgement of ‘good design’ is subjective and human. This readily makes the computer seem incompetent.

A contrasting case-in-point:

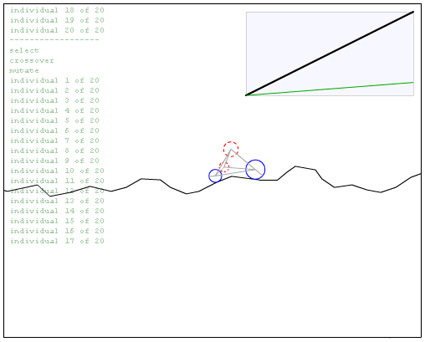

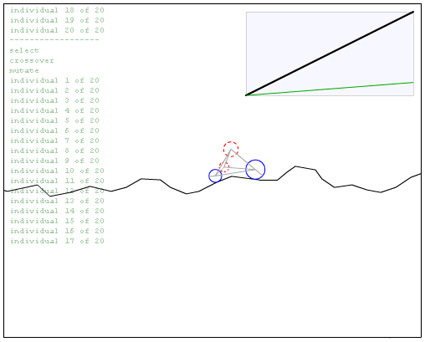

This was a Flash program by Matthew in which a primitive car design is iterated by the computer. The (objective) aim of the car, which defines whether it’s a good design or not, is the length of treacherous terrain it can get past before crashing. The variables are the size and initial positions of the 4 circles, and the length, spring constant and damping of the 8 springs.

If you let it run, you’d see that each time the car crashes, the program restarts and tries to refine the design again, and over time the design improves without further manual input.

Here is the difference – with quantifiable, objective feedback on the success of a design, computers can automate and rapidly refine designs, very likely better than a human can. If the evaluation is subjective, however, the process becomes ineffective or slows down by orders of magnitude.
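A loop of this kind can be sketched in a few lines of Python. This is a generic mutate-and-select scheme under my own assumptions, not necessarily how the Flash program works: the genome length, the mutation scale, and above all the `fitness` function are hypothetical. A real version would run a physics simulation and return the distance travelled; here a simple formula stands in for it.

```python
import random

GENOME_LENGTH = 8  # hypothetical number of design variables, each kept in [0, 1]


def fitness(genome):
    """Stand-in objective score (higher is better).

    Assumption: a real version would simulate the car and return the distance
    it covers before crashing. This toy formula only makes the loop runnable.
    """
    return -sum((gene - 0.7) ** 2 for gene in genome)


def mutate(genome, rng, scale=0.1):
    """Nudge every variable by Gaussian noise, clamped to the allowed range."""
    return [min(1.0, max(0.0, gene + rng.gauss(0.0, scale))) for gene in genome]


def evolve(generations=200, population_size=20, seed=0):
    """Keep the better half each generation and refill with mutated copies."""
    rng = random.Random(seed)
    population = [
        [rng.random() for _ in range(GENOME_LENGTH)]
        for _ in range(population_size)
    ]
    best_scores = []
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        best_scores.append(fitness(population[0]))
        survivors = population[: population_size // 2]
        children = [
            mutate(rng.choice(survivors), rng)
            for _ in range(population_size - len(survivors))
        ]
        population = survivors + children
    return max(population, key=fitness), best_scores


best_design, best_scores = evolve()
```

Because the survivors are carried over unchanged, the best score can never get worse from one generation to the next. The whole thing only works because `fitness` returns a number; replace it with "ask a human whether this looks good" and each generation takes minutes instead of microseconds.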

In a landscape where we are increasingly talking about user-generated content, democratic design and increased semantic intelligence for computers, this may become more relevant. There are already web advertisements that modify their own designs (font size, colors, images, etc.) on the fly based on real-time feedback on click-through rates.
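I don't know how those ad systems actually work, but one simple way to get this behaviour is an epsilon-greedy strategy: mostly show the variant with the best click-through rate so far, and occasionally try a random one. The sketch below is an assumption on my part, and the variant names and click rates in the usage line are invented for the simulation.

```python
import random


def choose_variant(stats, rng, epsilon=0.1):
    """Explore a random variant with probability epsilon, else pick the best so far."""
    if rng.random() < epsilon:
        return rng.choice(list(stats))
    # Observed click-through rate; max(1, ...) avoids dividing by zero early on.
    return max(stats, key=lambda v: stats[v]["clicks"] / max(1, stats[v]["shows"]))


def simulate(true_rates, rounds=5000, seed=0):
    """Simulate showing ads to visitors who click with hidden probabilities."""
    rng = random.Random(seed)
    stats = {variant: {"shows": 0, "clicks": 0} for variant in true_rates}
    for _ in range(rounds):
        variant = choose_variant(stats, rng)
        stats[variant]["shows"] += 1
        if rng.random() < true_rates[variant]:
            stats[variant]["clicks"] += 1
    return stats


# Hypothetical variants and click probabilities, made up for illustration.
stats = simulate({"large_font": 0.02, "red_button": 0.05, "with_photo": 0.03})
```

The click is the objective feedback here, playing the same role as the distance travelled by the car.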

How/where else can this be applied?